
Caching on production

I’ve recently received a few questions about the best caching strategies for Alokai in production. The production setup options are explained in our docs, but the caching part was apparently left out. That’s why I decided to write a few words on this topic. Here we go!

SSR Output Cache

Alokai supports a very efficient Server-Side Rendering (SSR) cache. In this mode, the fully generated HTML pages are stored in Redis. Each cache entry is tagged with all the products and categories (and potentially other data entities) used to render that specific page.

Let’s say a category page contains 20 products, products = [{ id: 120, ... }, { id: 324, ... }, ...]. After this category page is rendered, Alokai tags the output cache entry with the following tags: P120, P324, …, C56 (if the category ID is 56).
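The tagging scheme can be sketched as a minimal, hypothetical TypeScript model. It is not Alokai’s implementation: Redis is replaced by in-memory maps, and tagsForCategoryPage and TaggedCache are illustrative names. It shows how one rendered page is indexed under every product and category tag, so invalidating any single tag removes the page.

```typescript
// Hypothetical model of a tag-based SSR output cache. Redis is replaced by
// in-memory Maps (assumption) so the example is self-contained.

interface Product { id: number }

// One "P<id>" tag per product on the page, plus one "C<id>" tag for the category.
function tagsForCategoryPage(categoryId: number, products: Product[]): string[] {
  return [...products.map(p => `P${p.id}`), `C${categoryId}`];
}

class TaggedCache {
  private entries = new Map<string, string>();        // cache key -> rendered HTML
  private keysByTag = new Map<string, Set<string>>(); // tag -> cache keys using it

  set(key: string, html: string, tags: string[]): void {
    this.entries.set(key, html);
    tags.forEach(tag => {
      const keys = this.keysByTag.get(tag) ?? new Set<string>();
      keys.add(key);
      this.keysByTag.set(tag, keys);
    });
  }

  get(key: string): string | undefined {
    return this.entries.get(key);
  }

  // Removes every entry tagged with any of the given tags and returns how many
  // entries were actually deleted. (Key sets of other tags may keep stale keys;
  // a production version would prune them too.)
  invalidate(tags: string[]): number {
    let removed = 0;
    tags.forEach(tag => {
      const keys = this.keysByTag.get(tag);
      if (!keys) return;
      keys.forEach(key => {
        if (this.entries.delete(key)) removed++;
      });
      this.keysByTag.delete(tag);
    });
    return removed;
  }
}

// Usage: cache a category page, then invalidate it through one of its products.
const cache = new TaggedCache();
const tags = tagsForCategoryPage(56, [{ id: 120 }, { id: 324 }]);
cache.set("/women/dresses", "<html>…</html>", tags);
console.log(tags.join(", "));              // P120, P324, C56
console.log(cache.invalidate(["P120"]));   // 1
console.log(cache.get("/women/dresses"));  // undefined
```

Indexing keys by tag makes an invalidation proportional to the number of affected pages rather than to the size of the whole cache.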

Each time the Alokai indexers (both mage2vuestorefront and magento2-vsbridge-indexer) push changes for any tagged product to Elastic, the indexer calls a URL like https://your-instance.com/invalidate?tags=P120. This request invalidates all cache entries tagged with that specific product, so there is virtually no risk that users get outdated product information.
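Both sides of this invalidation call can be sketched as follows. buildInvalidateUrl and parseInvalidateTags are hypothetical helpers, and the comma-separated tags parameter simply follows the example URL above; the exact parameters your Alokai version expects (for example, a secret key) may differ.

```typescript
// Hypothetical helpers around the /invalidate?tags=... call. The query format
// (comma-separated "tags" parameter) is an assumption based on the example URL.

// Indexer side: build the invalidation URL for the entities that changed.
function buildInvalidateUrl(baseUrl: string, tags: string[]): string {
  return `${baseUrl}/invalidate?tags=${encodeURIComponent(tags.join(","))}`;
}

// Storefront side: extract the tag list from the incoming request URL.
function parseInvalidateTags(requestUrl: string): string[] {
  const query = requestUrl.split("?")[1] ?? "";
  for (const pair of query.split("&")) {
    const eq = pair.indexOf("=");
    const key = eq === -1 ? pair : pair.slice(0, eq);
    const value = eq === -1 ? "" : pair.slice(eq + 1);
    if (key === "tags") {
      return decodeURIComponent(value).split(",").filter(t => t.length > 0);
    }
  }
  return [];
}

const url = buildInvalidateUrl("https://your-instance.com", ["P120"]);
console.log(url);                                  // https://your-instance.com/invalidate?tags=P120
console.log(parseInvalidateTags(url).join(", ")); // P120
```

The parsed tags would then be handed to whatever removes the tagged entries from the output cache.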

Typical cached requests take about 2–50ms to execute — compared to about 100ms for non-cached ones.

Hint: When you’re dealing with a memory leak (for example, after using mixins in the Vue.js theme), the SSR cache can be very helpful. It won’t eliminate the leak, but it lets you … live with it: with the cache on, Vue.js processes significantly fewer dynamic requests, which keeps memory usage down.

API output cache

Although the SSR cache is pretty cool, the overall cache hit ratio would still be fairly low even with it enabled. That’s because most requests are executed in CSR (client-side rendering) mode, querying the API directly from the client’s browser. If you’re facing Elastic performance issues, or Elastic is using more resources than you’d like, it’s time to introduce the API output cache.

We recommend putting an nginx or Varnish proxy in front of vue-storefront-api. With such a proxy in place, adding the API cache is pretty easy.

Note: Most API requests are executed using the POST method; before VS 1.8, even the api/catalog requests were POST. Proxies don’t cache POST requests by default, so this has to be enabled explicitly. A short tutorial explains how to enable POST request caching in Varnish and Nginx.

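For nginx, the cache declaration is pretty simple:

# In the http {} context: declare the "static" cache zone used below
# (path and sizes are example values)
proxy_cache_path /var/cache/nginx/api levels=1:2 keys_zone=static:10m max_size=1g inactive=60m;

location /api/ {
    proxy_pass https://vue-storefront-api:8080/api/;
}

location /api/catalog/ {
    proxy_pass https://vue-storefront-api:8080/api/catalog/;
    proxy_cache static;
    # cache successful responses for one hour
    proxy_cache_valid 200 1h;
    # nginx only caches GET and HEAD by default; catalog queries are POST
    proxy_cache_methods GET HEAD POST;
    # $request_body is only set when the body fits in memory, so keep this buffer
    # larger than your biggest catalog query (example value)
    client_body_buffer_size 128k;
    # the body is part of the key, so different queries get separate cache entries
    proxy_cache_key "$scheme$proxy_host$request_uri|$request_body";
}

Note: StorefrontCloud.io uses the API output cache along with the Amazon CDN as its default configuration, with pretty decent performance results.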
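Why $request_body belongs in the nginx proxy_cache_key can be shown with a simplified TypeScript model. It only concatenates the same variables nginx uses (nginx then hashes the key with MD5 to name the cache file); the URI and query bodies are hypothetical examples.

```typescript
// Simplified model of the nginx cache key for POST requests (not nginx's code).
interface ApiRequest {
  scheme: string; // nginx $scheme, e.g. "https"
  host: string;   // nginx $proxy_host, host:port from proxy_pass
  uri: string;    // nginx $request_uri
  body: string;   // nginx $request_body
}

// Mirrors proxy_cache_key "$scheme$proxy_host$request_uri|$request_body"
function cacheKey(req: ApiRequest): string {
  return `${req.scheme}${req.host}${req.uri}|${req.body}`;
}

// The same key without the body: every POST query to one URI would collide.
function cacheKeyWithoutBody(req: ApiRequest): string {
  return `${req.scheme}${req.host}${req.uri}`;
}

// Hypothetical catalog search: same URI, different query bodies.
const base = { scheme: "https", host: "vue-storefront-api:8080", uri: "/api/catalog/search" };
const shirts: ApiRequest = { ...base, body: '{"query":{"match":{"category_ids":12}}}' };
const shoes: ApiRequest = { ...base, body: '{"query":{"match":{"category_ids":34}}}' };

console.log(cacheKey(shirts) === cacheKey(shoes));                       // false
console.log(cacheKeyWithoutBody(shirts) === cacheKeyWithoutBody(shoes)); // true
```

Without the body in the key, the first cached search result would be served for every other search sent to the same endpoint.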

Dynamic API output caching

Starting with Alokai 1.11 (currently an experimental release), there will be an option to use Dynamic Output Caching. With this mode on, the API output cache is tagged with dynamic tags corresponding to all the products and categories included in the search results.

If the product with ID = 123 appears on the results page, the API tags the output with a P123 tag. All of our official indexers (magento*-vsbridge-indexer and mage2vuestorefront) support dynamic cache invalidation. This means the output cache is invalidated page by page whenever any product stock or category information changes. Read more about the output cache in the Pull Request.
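Dynamic tagging of API output can be sketched like this. The SearchHit shape (an entityType plus an id) is a simplified assumption, not the real Elasticsearch or Alokai response format, and dynamicTags and isStale are hypothetical names.

```typescript
// Hypothetical sketch: derive cache tags from the entities in a search result.
type EntityType = "product" | "category";

interface SearchHit {
  entityType: EntityType;
  id: number;
}

const TAG_PREFIX: Record<EntityType, string> = { product: "P", category: "C" };

// One tag per distinct entity in the results, in order of first appearance.
function dynamicTags(hits: SearchHit[]): string[] {
  const tags: string[] = [];
  const seen = new Set<string>();
  hits.forEach(hit => {
    const tag = TAG_PREFIX[hit.entityType] + hit.id;
    if (!seen.has(tag)) {
      seen.add(tag);
      tags.push(tag);
    }
  });
  return tags;
}

// A cached response is stale once an indexer has invalidated any of its tags.
function isStale(entryTags: string[], invalidatedTags: string[]): boolean {
  return entryTags.some(tag => invalidatedTags.indexOf(tag) !== -1);
}

const tags = dynamicTags([
  { entityType: "product", id: 123 },
  { entityType: "product", id: 123 },
  { entityType: "category", id: 7 },
]);
console.log(tags.join(", "));         // P123, C7
console.log(isStale(tags, ["P123"])); // true
```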

Stock and prices

With the API cache enabled, even with short TTLs (like 1 minute), there is a chance that prices and stock information get out of sync with the backend. That could be risky from a business perspective.

To handle this issue, we implemented the following mechanisms:

- Alokai calls vue-storefront/api/stock/check, which queries the eCommerce backend (let’s say Magento) each time a product is added to the cart or updated in the cart, and when the user enters the Checkout. The cart synchronization mechanism then checks the stock a second time. The final check happens when the order is sent to the backend (the user gets an error if any product in the cart is unavailable). In practice, it’s almost impossible to sell a product that is out of stock. If the data gets out of sync, the only downside is that the user sees the Add to cart button and gets an error message after clicking it. This can also be avoided by enabling config.products.filterUnavailableVariants; in that case, an additional api/stock/check call is made when the user enters the product page.

- Alokai can query the eCommerce backend (e.g. Magento) for current prices each time the user enters a category or product page. Because these backend prices override the cached pricing data from Elastic, the risk of showing wrong prices to the customer is minimal. What’s more, cart totals are always calculated server-side using current product prices, so the user will always see the correct prices before placing the final order. Please read more about the alwaysSyncPlatformPricesOver mode.
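The "use the cache, but verify with the backend" approach behind both mechanisms can be sketched as follows. StockClient, PriceClient, addToCart and syncPrices are hypothetical stand-ins for calls like api/stock/check and the platform price sync, not actual Alokai APIs; the SKUs are example values.

```typescript
// Hypothetical sketch: cached catalog data is shown, but stock and prices are
// re-checked against the eCommerce backend where it matters.

interface CachedProduct { sku: string; price: number }

interface StockClient {
  isInStock(sku: string): Promise<boolean>;
}

interface PriceClient {
  currentPrices(skus: string[]): Promise<Record<string, number>>;
}

// On add-to-cart: ask the backend first and refuse the action if unavailable.
async function addToCart(
  cart: string[],
  sku: string,
  stock: StockClient
): Promise<{ ok: boolean; message?: string }> {
  if (!(await stock.isInStock(sku))) {
    return { ok: false, message: `Product ${sku} is out of stock` };
  }
  cart.push(sku);
  return { ok: true };
}

// On category/product page: backend prices take precedence over cached ones.
async function syncPrices(products: CachedProduct[], prices: PriceClient): Promise<CachedProduct[]> {
  const live = await prices.currentPrices(products.map(p => p.sku));
  return products.map(p => (p.sku in live ? { ...p, price: live[p.sku] } : p));
}

// Usage with fake backend clients.
const fakeStock: StockClient = { isInStock: async sku => sku !== "WS12" };
const fakePrices: PriceClient = { currentPrices: async () => ({ MH01: 49 }) };

(async () => {
  const cart: string[] = [];
  console.log((await addToCart(cart, "WS12", fakeStock)).message); // Product WS12 is out of stock
  const synced = await syncPrices([{ sku: "MH01", price: 52 }, { sku: "MJ02", price: 70 }], fakePrices);
  console.log(synced.map(p => `${p.sku}:${p.price}`).join(", ")); // MH01:49, MJ02:70
})();
```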

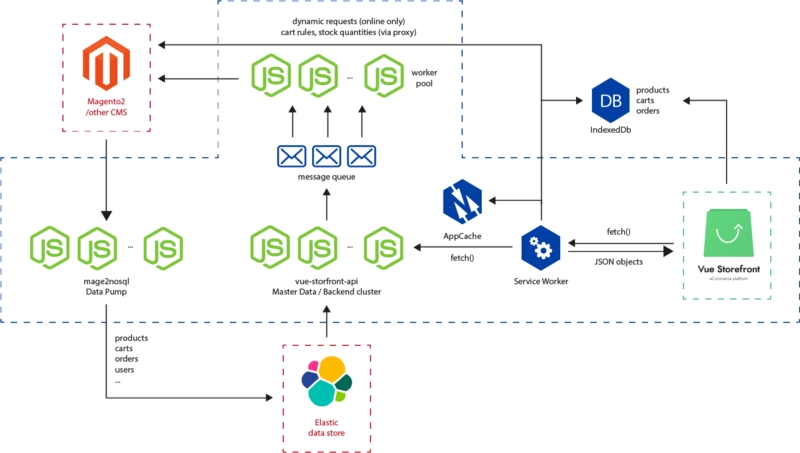

Service Worker

Before the 1.11 release, we used LocalStorage or IndexedDB to cache products and categories for offline mode, because not all browsers (iOS) supported Service Workers. However, that has changed! From 1.11 on, we use Service Workers exclusively.

The Service Worker cache uses the NetworkFirst mode: the backend request is always executed first and its response refreshes the local cache, while the locally cached copy is only served when the network request fails (for example, when offline). That means there is virtually no risk of stale or non-invalidated data being served from the local cache.
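The NetworkFirst mode can be sketched as a plain function so it runs outside a browser. In a real Service Worker this is usually delegated to a library (Workbox, for instance, ships a NetworkFirst strategy); the Map-based cache and the Fetcher signature here are assumptions for illustration.

```typescript
// Hypothetical NetworkFirst sketch: network wins, cache is only a fallback.
type Fetcher = (url: string) => Promise<string>;

async function networkFirst(
  url: string,
  fetchFromNetwork: Fetcher,
  cache: Map<string, string>
): Promise<{ body: string; source: "network" | "cache" }> {
  try {
    // Always ask the backend first and refresh the local copy with the answer.
    const body = await fetchFromNetwork(url);
    cache.set(url, body);
    return { body, source: "network" };
  } catch (err) {
    // Only when the network fails (e.g. offline) fall back to the local copy.
    const cached = cache.get(url);
    if (cached !== undefined) return { body: cached, source: "cache" };
    throw err;
  }
}

// Usage: one online request fills the cache, the offline one is served from it.
const localCache = new Map<string, string>();
let online = true;
const fetcher: Fetcher = async url => {
  if (!online) throw new Error("offline");
  return `fresh data for ${url}`;
};

(async () => {
  console.log((await networkFirst("/api/catalog/product/1", fetcher, localCache)).source); // network
  online = false;
  console.log((await networkFirst("/api/catalog/product/1", fetcher, localCache)).source); // cache
})();
```

The trade-off is latency: every request waits for the network while online, which is what keeps stale data out of the local cache.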

Hint: If you’re on Alokai ≤ 1.10 and your clients are facing LocalStorage quota exceeded problems.

Images and static content cache

Since the 1.10 release, the vue-storefront-api image resizer supports exchangeable caching strategies (local filesystem, Google Cloud, custom …).
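An exchangeable caching strategy usually means the resizer depends only on a small interface, and the storage backend is chosen by configuration. The sketch below is hypothetical: ImageCache, InMemoryImageCache and ImageResizer are illustrative names, not the actual vue-storefront-api interfaces, and the in-memory store stands in for a filesystem or cloud bucket.

```typescript
// Hypothetical pluggable cache for resized images.
interface ImageCache {
  get(key: string): Promise<Uint8Array | undefined>;
  set(key: string, image: Uint8Array): Promise<void>;
}

// Stand-in for a local-filesystem or Google Cloud Storage backend.
class InMemoryImageCache implements ImageCache {
  private store = new Map<string, Uint8Array>();
  async get(key: string) { return this.store.get(key); }
  async set(key: string, image: Uint8Array) { this.store.set(key, image); }
}

type Resize = (path: string, width: number, height: number) => Promise<Uint8Array>;

// The resizer only knows the interface, so backends can be swapped freely.
class ImageResizer {
  private cache: ImageCache;
  private resize: Resize;

  constructor(cache: ImageCache, resize: Resize) {
    this.cache = cache;
    this.resize = resize;
  }

  async getImage(path: string, width: number, height: number): Promise<Uint8Array> {
    const key = `${width}x${height}${path}`;
    const cached = await this.cache.get(key);
    if (cached) return cached;                 // cache hit: skip the expensive resize
    const image = await this.resize(path, width, height);
    await this.cache.set(key, image);
    return image;
  }
}

// Usage: the second identical request is served from the cache.
let resizeCalls = 0;
const fakeResize: Resize = async () => { resizeCalls++; return new Uint8Array([1, 2, 3]); };
const resizer = new ImageResizer(new InMemoryImageCache(), fakeResize);

(async () => {
  await resizer.getImage("/product/123.jpg", 310, 300);
  await resizer.getImage("/product/123.jpg", 310, 300);
  console.log(resizeCalls); // 1
})();
```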

Note: Please make sure your static content, including images, is served with proper HTTP caching headers (such as Cache-Control) so that browsers can cache it efficiently.
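One way to pick those headers is sketched below. The policy (one year plus immutable for content-hashed files, one day for other images, revalidation for everything else) and the fingerprint pattern are example assumptions; adjust them to how your build names its files.

```typescript
// Example policy for Cache-Control headers on static assets (assumptions).

// Matches names like app.3f9a1c2b.js: 8+ hex characters before the extension.
const FINGERPRINTED = /\.[0-9a-f]{8,}\.(js|css|woff2?|png|jpe?g|webp|svg)$/i;

function cacheControlFor(path: string): string {
  if (FINGERPRINTED.test(path)) {
    // The name changes whenever the content does, so it can be cached "forever".
    return "public, max-age=31536000, immutable";
  }
  if (/\.(png|jpe?g|webp|gif|svg)$/i.test(path)) {
    // Images without a content hash (e.g. resized product images): one day.
    return "public, max-age=86400";
  }
  // Everything else: the browser may store it but must revalidate before reuse.
  return "no-cache";
}

console.log(cacheControlFor("/dist/app.3f9a1c2b.js")); // public, max-age=31536000, immutable
console.log(cacheControlFor("/images/product.jpg"));   // public, max-age=86400
console.log(cacheControlFor("/index.html"));           // no-cache
```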

Summary

Although Alokai and Elastic perform well out of the box, we encourage you to enable the SSR and API caches in production. Please note that this always requires some manual testing to make sure none of your business cases regress.
